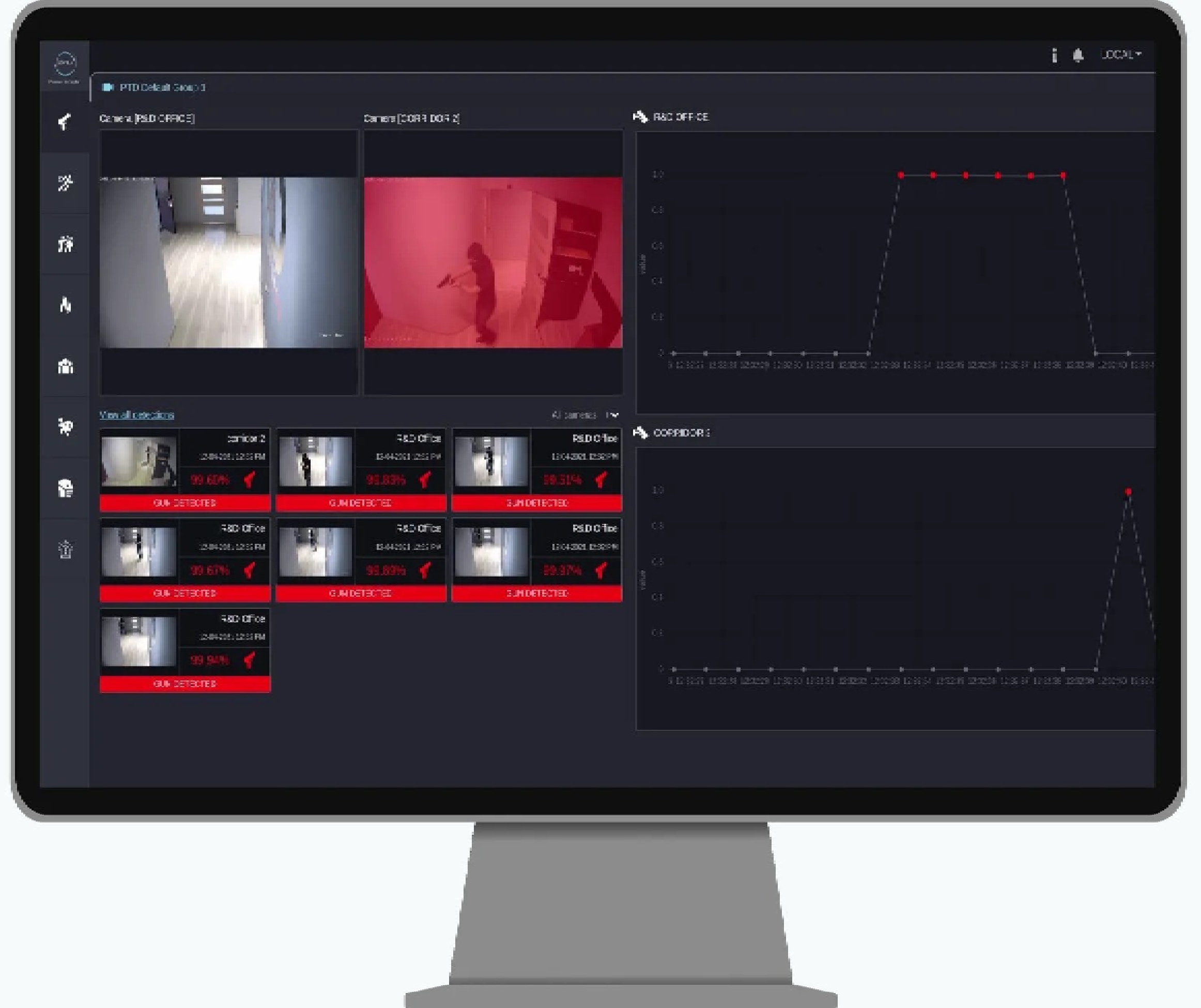

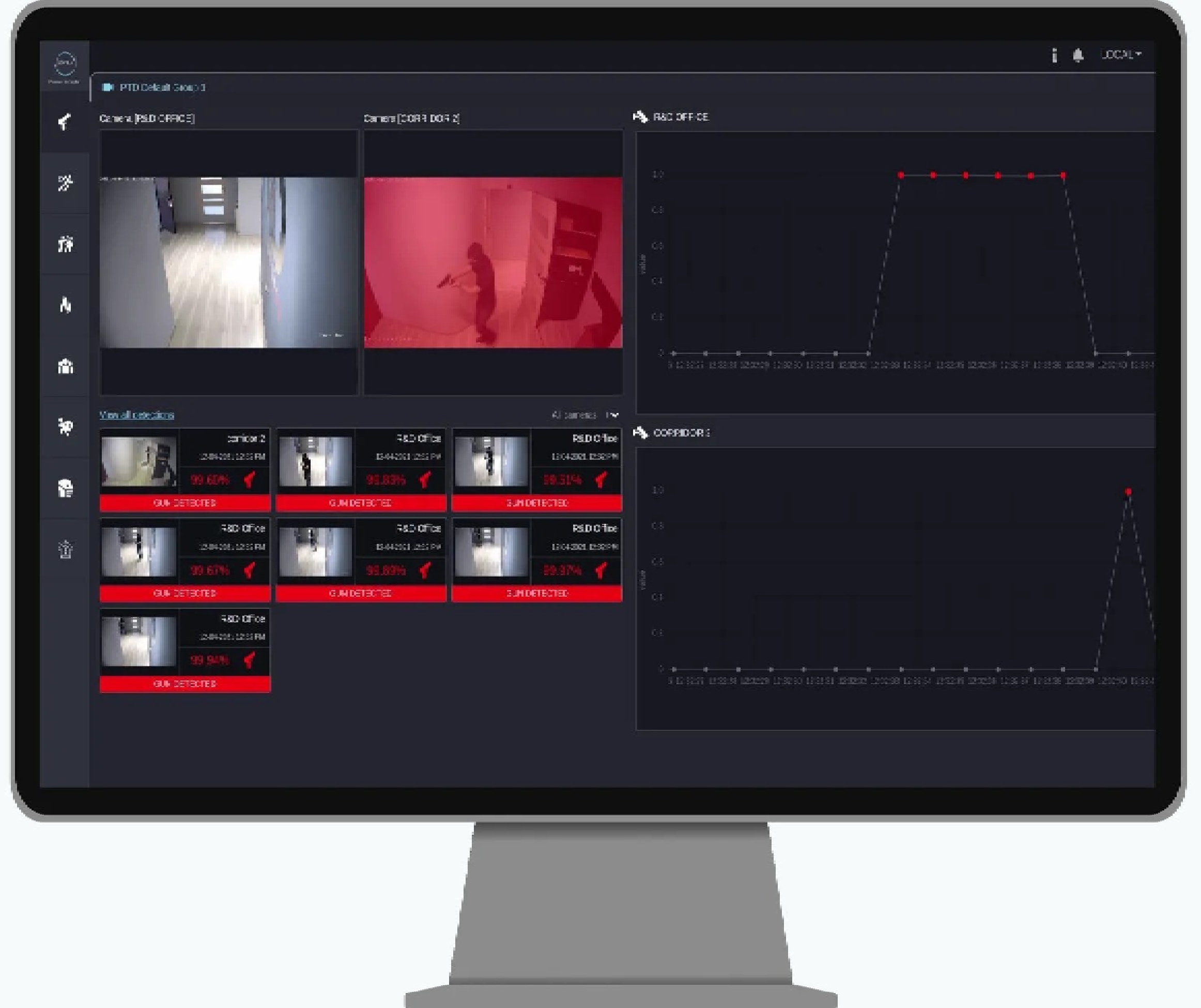

Preventive Threat Detection

AI-powered proprietary object detection and classification engine augments your security infrastructure and provides real time situational awareness.

From 2013 to 2019, the frequency of mass shootings increased by 65 percent. Gun and object detection are at the core of preventive threat awareness and identification. Preventive Threat Detection (PTD) uses gun and object detection to actively identify objects and patterns that are potential threats.

We use your existing security infrastructure and transform it into a proactive security system that searches for potential threats. Our proprietary object detection and classification engine strengthens your current security posture and provides stronger awareness of threats to your environment.

How It Works

Our proprietary object detection algorithm is trained to detect a number of specified objects in a single frame or across multiple frames. The initial detection pass is fast. The detector then forwards each region of interest to the Charon classifier for a more accurate assessment.

Charon carefully examines the suspected object and validates the threat. Our Object Detection System provides an impressively low false positive rate without missing objects of interest.
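The two-stage flow described above (a fast detector followed by a slower, more careful classifier) can be sketched roughly as follows. This is only an illustration of the general cascade pattern, not the actual engine: the names `Candidate`, `Detection`, `cascade`, the two thresholds, and the stub detector and classifier are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

Box = Tuple[int, int, int, int]  # (x, y, width, height) in pixels


@dataclass
class Candidate:
    box: Box
    label: str
    score: float  # confidence from the fast first stage


@dataclass
class Detection:
    box: Box
    label: str
    score: float  # confidence from the second-stage classifier


def cascade(frame, detect: Callable, classify: Callable,
            detect_threshold: float = 0.3,
            confirm_threshold: float = 0.8) -> List[Detection]:
    """Run a fast detector, then validate each region of interest."""
    confirmed = []
    for cand in detect(frame):
        if cand.score < detect_threshold:
            continue  # too weak to be worth a second look
        x, y, w, h = cand.box
        # Crop the region of interest (frame is a list of pixel rows here).
        roi = [row[x:x + w] for row in frame[y:y + h]]
        score = classify(roi, cand.label)
        if score >= confirm_threshold:
            confirmed.append(Detection(cand.box, cand.label, score))
    return confirmed


# Stub stages standing in for the real models (assumptions for the demo).
def fake_detect(frame):
    return [Candidate((1, 1, 2, 2), "gun", 0.6),
            Candidate((0, 0, 1, 1), "knife", 0.4)]


def fake_classify(roi, label):
    return 0.95 if label == "gun" else 0.2


frame = [[0] * 4 for _ in range(4)]
result = cascade(frame, fake_detect, fake_classify)
```

In this sketch the permissive first threshold keeps the detector from missing objects, while the stricter second threshold is what holds the false positive rate down.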

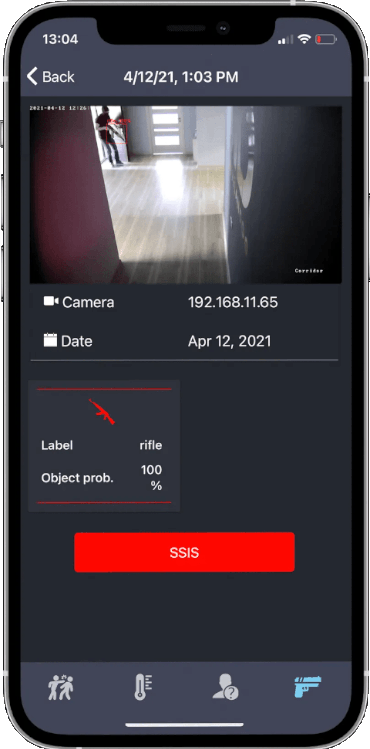

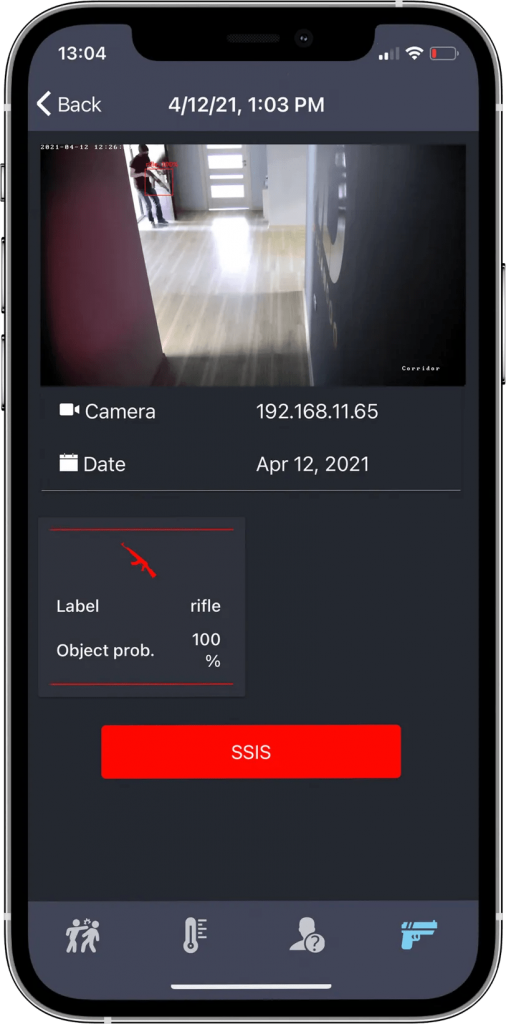

An alert is then triggered and distributed to the end user through web, mobile or integrated VMS channels along with the time, location and screenshot of the detection.
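As a rough illustration of such an alert, the sketch below bundles the time, location and screenshot into one payload and hands it to several channels. The field names, the `Alert` class, and the channel callables are assumptions made for this example; they are not the product's actual alert schema or API.

```python
import base64
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone
from typing import Callable, Dict, Optional


@dataclass
class Alert:
    label: str           # detected object class, e.g. "gun"
    camera_id: str
    location: str
    detected_at: str     # ISO 8601 timestamp
    screenshot_b64: str  # screenshot encoded for transport in JSON


def build_alert(label: str, camera_id: str, location: str,
                screenshot: bytes,
                when: Optional[datetime] = None) -> Alert:
    when = when or datetime.now(timezone.utc)
    return Alert(label, camera_id, location, when.isoformat(),
                 base64.b64encode(screenshot).decode("ascii"))


def dispatch(alert: Alert,
             channels: Dict[str, Callable[[str], bool]]) -> Dict[str, bool]:
    """Send the same JSON payload to every configured channel."""
    payload = json.dumps(asdict(alert))
    return {name: send(payload) for name, send in channels.items()}


# Hypothetical channels that just record what they were sent.
sent = []
channels = {
    "web": lambda p: sent.append(("web", p)) is None,
    "mobile": lambda p: sent.append(("mobile", p)) is None,
    "vms": lambda p: sent.append(("vms", p)) is None,
}
alert = build_alert("gun", "cam-7", "Lobby, north entrance", b"img",
                    when=datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc))
status = dispatch(alert, channels)
```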

The object detection is unaffected by the background scene or motion and can accurately analyze the content of video streams from stationary and moving cameras.

The hardware requirements of our Object Detection System are much lower than those of similar systems on the market.

AI supports

The system is trained to detect and identify a wide variety of suspicious objects, weapons and personal protective equipment.

Weapon detection

Rifle

Gun

Shotgun

Knife and more

Personal protective equipment monitoring

Helmet

Mask and more

What makes our Object Detection System stand out

AI methodologies work autonomously 24/7 and self-improve

Can be seamlessly integrated with most cameras and VMS

Effectively operates on cameras with moving backgrounds such as drones and body cams

Hardware requirements seven times lower than those of similar solutions on the market

Can be deployed either on premises or in the cloud

FAQ

Can our Object Detection System work effectively outdoors?

Yes. The models are trained on a wide variety of scenes and backgrounds, with varied illumination and viewpoints. The solution is essentially agnostic to the background: as long as the object of interest is visible, the system will detect it.

What is the maximum detection distance?

The answer depends on a number of factors. To begin with, it depends on the camera characteristics, resolution in particular. Resolution plays a big role in the initial detection. However, the incorporated zooming-tracking algorithm lets us check the object at the camera's original resolution. Thus, unlike similar AI security solutions, we are not heavily dependent on image quality, which is usually downgraded when frames are processed through neural network platforms.

Then there is a group of characteristics related to the camera's "picture quality", such as stream bandwidth and encoding. Visibility conditions matter as well, such as the illumination, the position of the object (see the question on object angle below) and the pixel size of the object. The pixel size is inversely proportional to the distance from the camera and can be used to estimate the distance limit. For example, the reliable minimum size for a gun is around 15-17 pixels, which gives a maximum distance of up to 10-12 meters for most HD cameras.
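The distance estimate can be reproduced with a simple pinhole camera model, in which the apparent size of an object in pixels falls off in inverse proportion to its distance. The sketch below is a back-of-the-envelope calculation, not the system's own method; the 0.2 m handgun length and the 70° horizontal field of view are illustrative assumptions, and the function names are made up for this example.

```python
import math


def focal_length_px(image_width_px: int, hfov_deg: float) -> float:
    """Focal length expressed in pixels for a given horizontal field of view."""
    return (image_width_px / 2) / math.tan(math.radians(hfov_deg) / 2)


def object_size_px(object_size_m: float, distance_m: float,
                   image_width_px: int, hfov_deg: float) -> float:
    """Apparent size of an object in pixels at a given distance."""
    return object_size_m * focal_length_px(image_width_px, hfov_deg) / distance_m


def max_distance_m(object_size_m: float, min_pixels: float,
                   image_width_px: int, hfov_deg: float) -> float:
    """Farthest distance at which the object still spans min_pixels."""
    return object_size_m * focal_length_px(image_width_px, hfov_deg) / min_pixels


# Assumed values: 0.2 m handgun, 1280-pixel-wide HD frame, 70 degree lens.
far_limit = max_distance_m(0.2, 15, 1280, 70)   # roughly 12 m
near_limit = max_distance_m(0.2, 17, 1280, 70)  # roughly 11 m
```

Under these assumptions the 15-17 pixel minimum lands in the 10-12 meter range quoted above. A narrower lens or a higher-resolution sensor pushes the limit out proportionally.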

What’s the detection response time?

Detection typically happens within the first 400 ms (in some cases, up to 2 seconds). When assessing the response time, take into account that most IP cameras in use today add some sub-second lag to the video stream. For cloud deployments, also consider the time it takes for the stream to reach the cloud and for the response to travel back to the dashboard.
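One way to reason about the end-to-end response time is to add up the contributions listed above. The sketch below does only that; the camera lag and network figures in the example are placeholder assumptions, not measured values, and the function name is hypothetical.

```python
def end_to_end_latency_ms(detection_ms: float,
                          camera_lag_ms: float = 0.0,
                          uplink_ms: float = 0.0,
                          downlink_ms: float = 0.0) -> float:
    """Total delay between an event and the alert reaching the dashboard.

    uplink_ms and downlink_ms apply to cloud deployments only: the time for
    the stream to reach the cloud and for the response to come back.
    """
    return detection_ms + camera_lag_ms + uplink_ms + downlink_ms


# Typical 400 ms detection with an assumed 500 ms of camera stream lag.
on_premise = end_to_end_latency_ms(400, camera_lag_ms=500)

# Same detection in the cloud, with assumed network delays.
cloud = end_to_end_latency_ms(400, camera_lag_ms=500,
                              uplink_ms=150, downlink_ms=50)
```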

How does our Object Detection System help law enforcement?

Our Object Detection System is designed to help security units by supporting their daily operations, augmenting their capabilities and reducing human error. In addition, when a possible threat arises, the alert sent out by the system is enriched with the information needed for a quick and comprehensive analysis of the threat on site and for effective planning of countermeasures.

What is the detection response time?

Typically less than a second. In cloud deployments the response time can slightly increase depending on the client’s upload speed.

Can it detect a weapon if someone's carrying it in a bag?

The system is based on computer vision algorithms, and threat detection relies on visual analysis. This means that to detect a weapon in a bag, our system has to be connected to X-ray or millimeter-wave scanning devices. When running on CCTV cameras that operate in the visible range only, the Object Detection System can detect only non-concealed weapons.

Should the object be at an angle so that the system can detect it?

No, the system is trained on all possible angles of the objects of interest. In some specific cases the angle does matter, because the features the system uses to classify the object are more distinct at some angles than at others. For instance, if a gun or rifle is held at an angle to the camera, more distinct features are visible than when it is pointed directly at the camera.

What does the system do after detection?

An alert containing all the crucial information is compiled and delivered to the end users responsible for security. There are a number of customizable alerting pathways: the dashboard, the mobile alerting application, access point relay boards and the VMS alerting API.

How many cameras can the Object Detection System be integrated with?

The only limit is the hardware that runs the system analytics. More specifically, the deciding factor is the GPUs the servers are equipped with. Other than that, the Object Detection System can simultaneously accept video streams from different cameras with different characteristics.

Can ACT Object Detection work on the cloud?

Yes, all solutions provided by ACT can be deployed both in the cloud and on premises. Moreover, our solutions are cloud-provider agnostic, as long as the cloud instance runs Ubuntu 18.04 and is equipped with an NVIDIA GPU.

Is Object Detection System GDPR and CCPA compliant?

Absolutely. ACT does not store any information (unless requested specifically by the user).

What is the definition of a true and false alarm?

An alert is classified as a true alarm when the AI's prediction corresponds to reality (i.e. the object of interest is correctly identified, the action sought after is detected, etc.). A false alarm, or false positive, is an alert triggered by mistake. Unfortunately, due to the essentially probabilistic nature of AI, false alarms are inevitable in most cases.

However, thanks to the AI and machine learning behind the Object Detection System, it is designed to meet production-grade industrial standards. Moreover, ACT modules are continuously retrained on their mistakes so that the number of false alarms keeps falling over time.

What are the recommended camera parameters?

The Object Detection System is camera agnostic. Most questions on the limitations and camera requirements end up receiving a simplified “rule-of-thumb” answer – if a human can see and identify the object of interest, then ODS AI will also be able to do that (and in some cases, will even outperform a human due to the integrated zooming and re-checking algorithms). As for the minimal camera parameters, these will depend on each use case and the object of interest you are trying to detect. Of course, the camera should have a digital output or at least be connected to a DVR that has one.

The ACT Intrusion Detection and Perimeter Protection System accepts most common stream types, such as RTSP, RTMP and HTTP. The minimum required resolution usually starts at HD (1280×720) at 5 FPS. Parameters that define frame and image quality vary from one camera to another, but we recommend checking characteristics such as bandwidth, encoding and sharpness, and improving them if necessary.
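A quick pre-deployment check against these minimums might look like the sketch below. It is a hypothetical helper, not part of the product: the `StreamInfo` class, the `check_stream` function and the example URLs are assumptions, and the list of stream types covers only those named in the text.

```python
from dataclasses import dataclass
from typing import List
from urllib.parse import urlparse

# Minimums quoted in the text: HD resolution and 5 frames per second.
MIN_WIDTH, MIN_HEIGHT, MIN_FPS = 1280, 720, 5
# Only the stream types named in the text; others may also be accepted.
KNOWN_SCHEMES = {"rtsp", "rtmp", "http"}


@dataclass
class StreamInfo:
    url: str
    width: int
    height: int
    fps: float


def check_stream(info: StreamInfo) -> List[str]:
    """Return a list of problems; an empty list means the stream looks fine."""
    problems = []
    scheme = urlparse(info.url).scheme.lower()
    if scheme not in KNOWN_SCHEMES:
        problems.append(f"unrecognized stream type: {scheme or 'none'}")
    if info.width < MIN_WIDTH or info.height < MIN_HEIGHT:
        problems.append(
            f"resolution {info.width}x{info.height} is below "
            f"{MIN_WIDTH}x{MIN_HEIGHT}")
    if info.fps < MIN_FPS:
        problems.append(f"frame rate {info.fps} FPS is below {MIN_FPS} FPS")
    return problems


ok = check_stream(StreamInfo("rtsp://camera.local/stream1", 1920, 1080, 15))
weak = check_stream(StreamInfo("rtsp://camera.local/stream2", 640, 480, 3))
```

Here `ok` comes back empty, while `weak` lists both the resolution and the frame rate as problems.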

How long are alerts stored in history?

How long alerts are kept depends on the client's data retention policy. By default, we offer a storage duration of one month, but this period can be configured to match local policies.

Can ACT Object Detection System work on PTZ cameras?

Yes, it can. Our computer vision engine is indifferent to moving and changing backgrounds and works similarly to human vision: if the object is there and is distinguishable, the system will spot it and report it. Of course, blurring of objects during PTZ movements should be taken into account.

Can it work in harsh environments?

The Object Detection System is designed to work in challenging environments where cameras with embedded algorithms underperform. The AI engine compensates for the drawbacks imposed by demanding conditions, including but not limited to poor illumination, partially corrupted frames, environmental factors and the effects of weather.

Will it detect when there is a gun on a table?

The Object Detection System is not designed to detect unattended or holstered weapons. It triggers an alert as soon as the weapon is in a person's hands.

Can the Object Detection System work with IR/thermal cameras?

Yes, it can. The maximum detection distance of the Object Detection System will depend on the camera characteristics (contrast ratio, pixel crosstalk, etc.). In general, the solution complies with industry-standard DRI requirements, i.e. the recognition limit (the distance at which you can determine the class of an object) is ~15 pixels for small weapons.
